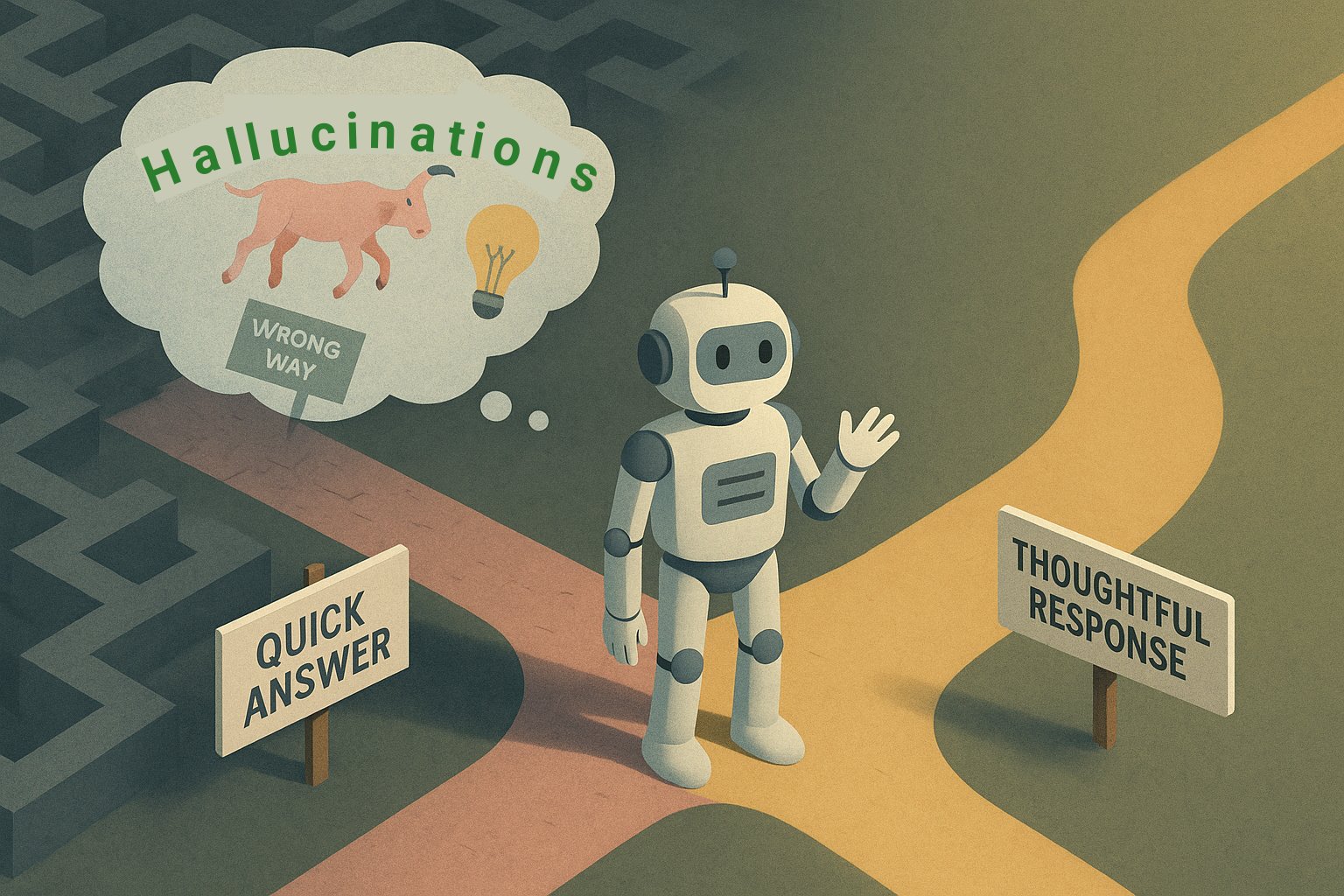

If your AI prompts are asking LLMs for super-short answers, you could be setting yourself up for trouble.

New research reveals that demanding brief responses from large language models (LLMs) like GPT-4o sharply increases the risk of hallucinations: wrong, made-up, or misleading outputs.

And for marketers, writers, and content creators who rely on AI daily, that’s a warning you can’t afford to ignore.

New Research: Short Answers, Big Problems

Giskard, a Paris-based AI testing company, recently published findings from its Phare benchmark (“Potential Harm Assessment & Risk Evaluation”). Its deep dive into chatbot behavior uncovered something startling:

- Instructions to keep answers short and concise sharply increased hallucination rates.

- For some top models, hallucinations spiked by as much as 40% when users asked for quick replies.

- Even leading models such as OpenAI’s GPT-4o, Mistral Large, and Anthropic’s Claude 3.7 Sonnet lost factual accuracy when told to keep their answers short.

As TechCrunch put it: “Short answers often equal wrong answers.”

Why? When AI is forced to compress complex reasoning into a tiny space, it often cuts corners, skips verification steps, and “guesses” to be fast and brief.

The result? Hallucinated facts. Misleading information. Errors that can tank your credibility.

Why AI Prompts Matter for Marketers & Writers

Marketers, writers, and content creators are especially vulnerable. You might be tempted to push for speed: “Give me a two-line answer!” or “Summarize this in five words!”

But if you’re relying on AI for research, content ideas, social media posts, or even SEO optimization, hallucinated content can cause serious damage:

- Mislead your audience with incorrect information.

- Erode trust in your brand or publication.

- Hurt SEO rankings if factual errors slip past your fact-checking.

And in the fast-moving, AI-powered content world, credibility is everything.

What You Should Do Instead

Here’s how to outsmart the risk and still work efficiently with AI prompts:

- Ask for slightly longer, reasoned answers. Instead of “Explain in two words,” try “Explain briefly, in 3-5 sentences.”

- Invite the model to think step-by-step. Prompt with phrases like “Walk me through the logic briefly” or “List the key points with reasoning.”

- Double-check quick responses. If you get a short answer, ask a follow-up question or verify the information externally.

The Bottom Line

In the race for speed, don’t sacrifice accuracy. Demanding short answers might seem efficient, but it’s a shortcut to hallucination, and hallucinations hurt your brand, your message, and your reputation.

Slow down just enough to get better outputs.

The research is clear: when you give AI room to reason, you get smarter, safer, and more trustworthy content.

Stay credible. Prompt smarter. Write better.

By Jeff Domansky, Managing Editor
